More ‘ghosts’ than ever are appearing in photos – thanks to digital cameras. This article is based on a presentation to the NZ Skeptics 2009 conference in Wellington, 26 September.

Since the beginnings of photography in the mid-nineteenth century, people have used the medium to capture images of ghosts, both naïvely and as a hoax for commercial gain. Until the arrival late in the nineteenth century of roll film, which was more light-sensitive than the earlier wet and dry plates, long exposure times sometimes caused spectral-looking figures to appear in photographs, accidentally or intentionally. Nearly all early photographs showing alleged ghosts can be explained by double exposure or long exposure, or are recordings of staged scenes: contrivances such as the cutout fairies at the bottom of the garden in Cottingley.

As cameras became more foolproof, with mechanisms to prevent accidental double exposure, accidental ghosts in photographs became scarce. During the 1990s I carried a compact 35mm camera (an Olympus Mju-1) and shot more than five thousand photos with it. At the time I was not looking for paranormal effects such as those described below, but a quick review of those photos turned up very few strange occurrences. This century, digital compact cameras have become ubiquitous, and supposed ghost photos have become common too. There is a connection.

Design-wise, the basic layout of a compact digital camera isn’t much different to that of a compact 35mm film camera: both have a lens with a minimum focal length a little shorter than standard¹ and a flash positioned close to the lens. The main differences are the lens focal lengths and the image recording medium.

A typical 35mm film camera has a semi-wide-angle lens in the range of 28mm to 38mm (it may also zoom well into the telephoto range, but we’re not much interested in that). A standard lens for the format is about 45mm. A digital compact camera is more likely to have a lens focal length starting in the range of 4mm to 7mm. A 5mm lens is typical, and at a maximum aperture of around f2.8 the diameter of the lens opening (focal length divided by f-number) is less than 2mm wide open, and can be less than 0.5mm when stopped down. (For comparison, the maximum aperture of my Mju-1 was 35mm/f3.5 = 10mm.) These tiny apertures allow things very close to the lens to be captured by the recording medium (albeit out of focus) even when the lens is focussed at medium to long distances.
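The aperture arithmetic above can be checked with a short sketch. It assumes the simple relation aperture diameter = focal length / f-number, using the 5mm f2.8 compact lens and the Mju-1's 35mm f3.5 lens from the text. The stopped-down setting of f11 is an assumption chosen for illustration, since the text gives no specific value.

```python
def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """Effective aperture (entrance pupil) diameter: focal length divided by f-number."""
    return focal_length_mm / f_number


# Figures from the text: a typical 5mm f2.8 compact lens and the Mju-1's 35mm f3.5 lens.
compact_wide_open = aperture_diameter_mm(5, 2.8)
mju_wide_open = aperture_diameter_mm(35, 3.5)
# Assumption: f11 stands in for a stopped-down compact setting (not given in the text).
compact_stopped = aperture_diameter_mm(5, 11)

for label, diameter in [
    ("compact, 5mm at f2.8", compact_wide_open),
    ("compact, 5mm at f11 (assumed)", compact_stopped),
    ("Mju-1, 35mm at f3.5", mju_wide_open),
]:
    print(f"{label}: {diameter:.2f} mm")
```

Run as written, this prints about 1.79mm, 0.45mm and 10.00mm respectively, which matches the figures quoted in the paragraph.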

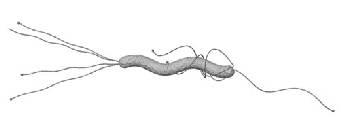

The most common photographic anomaly mistakenly held up as evidence of paranormal activity is the orb. Some natural objects that are visible to the unaided eye may photograph as orbs: any small or point source of light, either close by, such as a lit cigarette or burning marsh gas, or distant, such as the planet Venus. But other types of orbs show up only in photographs. You don’t see them, but the camera does. These are mainly caused by airborne dust, moisture droplets, or tiny insects. In the dark, they are lit only briefly (for a millisecond or so) by the camera flash. Dust is the most common cause of orbs in photographs: it is captured as an out-of-focus glow as it passes within centimetres of the camera lens, in the zone covered by the flash.

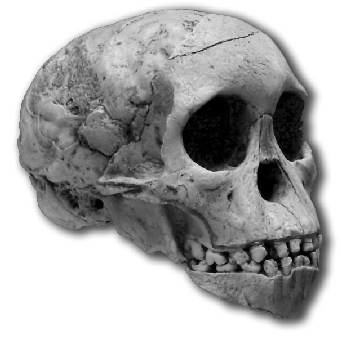

The diagram above shows how a compact digital camera, having its flash close to its short focal length lens, is able to photograph dust orbs. Most 35mm cameras won’t do this, because their lenses are too long in focal length to form a small enough Circle of Confusion² image of the dust. Larger Single Lens Reflex (SLR)-type cameras tend to have the flash positioned farther from the lens (above it), and also have larger image sensors and longer focal-length lenses, which makes them optically more like a 35mm camera.

Note: a built-in flash on a digital SLR, while closer to the lens axis than an accessory flash, is set some distance back from the front of the lens, so the nearby dust particles it illuminates are likewise out of the lens’s view: they are behind it.

Specifically, a dust orb is an image of the electronic flash reflected by a mote, out of focus and appearing at the film plane as a circular image the same shape as the fully open lens aperture. Most of the time when a compact camera takes a flash photo the aperture blades automatically stay out of the way to allow the widest possible lens opening. If the aperture blades close down at all, they create a diamond-shaped opening and any dust orb then becomes triangular, an effect predicted by this theory of dust orbs.

The diagram above shows a dust mote much closer to the camera lens than the focussed subject, a tree, and how the out-of-focus orb is superimposed on the tree in the processed image at the size of its Circle of Confusion at the film plane (or, in this case, the digital imaging plane).
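The size of that orb can be estimated with the standard thin-lens blur-circle formula, c = A × |S1 − S2| / S2 × f / (S1 − f), where A is the aperture diameter (f divided by the f-number), f the focal length, S1 the focus distance and S2 the distance of the mote. The sketch below is an idealised estimate, not a model of any particular camera. The scene (a mote 30mm in front of the lens, focus at 3m) and the image diagonals (about 7.2mm for a 1/2.5-inch compact sensor, 43.3mm for a 35mm film frame) are assumptions chosen for illustration.

```python
def blur_circle_mm(focal_length_mm: float, f_number: float,
                   focus_dist_mm: float, object_dist_mm: float) -> float:
    """Thin-lens blur circle (Circle of Confusion) diameter at the image plane."""
    aperture_mm = focal_length_mm / f_number
    return (aperture_mm
            * abs(focus_dist_mm - object_dist_mm) / object_dist_mm
            * focal_length_mm / (focus_dist_mm - focal_length_mm))


# Assumed scene: a dust mote 30mm in front of the lens, lens focussed at 3m.
MOTE_MM, FOCUS_MM = 30, 3000
# Assumed image-plane diagonals: small compact sensor vs 35mm film frame.
COMPACT_DIAGONAL_MM, FILM_DIAGONAL_MM = 7.2, 43.3

compact = blur_circle_mm(5, 2.8, FOCUS_MM, MOTE_MM)   # 5mm f2.8 compact lens
film = blur_circle_mm(35, 3.5, FOCUS_MM, MOTE_MM)     # 35mm f3.5 film compact lens

print(f"compact: {compact:.2f} mm, {compact / COMPACT_DIAGONAL_MM:.0%} of frame diagonal")
print(f"35mm film: {film:.2f} mm, {film / FILM_DIAGONAL_MM:.0%} of frame diagonal")
```

Under these assumptions the compact camera renders the mote as a disc of roughly 0.3mm, about 4% of the frame diagonal. The 35mm lens spreads the same reflection over a disc about 11.7mm across, roughly 27% of the diagonal, so its light is diluted into a faint wash rather than a distinct orb.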

Other common photographic anomalies which are sometimes assumed to be paranormal are caused by lens flare, internal reflections, dirty lenses and objects in front of the lens. These can all occur in any type of camera. What they have in common (and this includes dust orbs) is that the phenomena exist only in the camera: they will not be seen with the unaided eye. Most of the time, photographs that are held up as paranormal were taken when nothing apparently paranormal was suspected: the anomalous effect was only noticed later, upon reviewing the images.

Another confusing aspect of photographic anomalies is the loss of sense of scale, caused by the reduction of the 3D world to a 2D photograph. In the photo opposite, it appears the baby is looking at the orb, but actually the dust particle causing the orb is centimetres from the lens and the baby is looking at something else out of frame.

A variation on this is when someone senses the presence of a ghost and responds by taking a photograph. If a dust orb appears in the photo it may be assumed to be a visual representation or manifestation of the spiritual entity. Naïve paranormal investigators and other credulous types get terribly excited when this happens, and it often does during a ghost hunt. And ghost hunting is about the only type of activity that involves wandering around in the dark taking photos of nothing in particular. Now that digital cameras have large displays, photographers using the cameras during a paranormal investigation are able to immediately see dust orbs in their photos. If they believe these orbs to be paranormal, the hysteria of the investigators is fed. I’ve seen it happen. With film cameras and even with older digital cameras having smaller displays or no photo display at all, the orb effect was not usually observed until after the investigation.

Next is an enlarged part of a photo of the Oriental Bay Marina. The ghost lights in the sky are secondary images of light sources elsewhere in the photo, caused by internal reflections in the camera lens.

While operating a camera in the dark it is easy to make a mistake, such as letting the camera strap or something else get in front of the lens, or leaving a fingerprint on the lens that will cause lens flare later. Using the camera’s Night Photography mode combines flash with a slow shutter speed (usually several seconds), so any light source will leave light trails. Also, in Night mode a moving person will record as a blur combined with a sharp image from the flash, making it look as if a ‘mist’ surrounds them.

It is important to remember that a compact digital camera processes an image file before displaying it. While a more serious camera can shoot in Raw (unprocessed) mode, most compact cameras record the image in compressed JPEG form. Cellphone cameras usually apply heavy file compression to save memory and minimise transmission time. Digital compression creates artefacts, and the effect can be seen in the enlarged photo of the dust orb (page 12). Digital sharpening is also applied automatically, and it can turn a vague blur into a more definite shape, or a smear into a human face.
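How sharpening firms up a blur can be illustrated with unsharp masking, a common generic sharpening technique: sharpened = original + amount × (original − blurred). Camera makers do not publish their in-camera processing, so this is only a stand-in, not what any particular camera does. The one-dimensional row of brightness values (0 to 100) representing the soft rim of an orb is made up for illustration.

```python
def box_blur(signal: list[float], radius: int = 1) -> list[float]:
    """Average each sample with its neighbours, clamping at the ends."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def unsharp_mask(signal: list[float], amount: float = 1.0, radius: int = 1) -> list[float]:
    """Add back a scaled copy of the difference between the signal and its blur."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


# Hypothetical brightness values across the soft, out-of-focus edge of an orb.
soft_edge = [10, 10, 10, 30, 50, 70, 90, 90, 90]
sharpened = unsharp_mask(soft_edge, amount=1.5)
print([round(v) for v in sharpened])  # [10, 10, 0, 30, 50, 70, 100, 90, 90]
```

The gradual ramp gains a darker band on one side and a brighter band on the other, so the edge reads as a more definite outline than the blur the lens actually recorded.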

We are all aware of the tendency to recognise human faces or figures in random patterns. This is a strong instinct, possibly linked to infancy, when a baby learns to pick out a parent’s face from the surrounding incomprehensible shapes. Once people see human features in a photo, it is difficult to convince them that they’re looking at a random pattern and merely interpreting it as a face. The effect is called pareidolia, sometimes referred to as matrixing, and the perceived figure is called a simulacrum.

The ‘Face in the Middle’ photo, below, is an example of pareidolia. The third face appearing between the boy and girl is several background elements combining to produce the simulacrum. The low resolution and large amount of compression in this cellphone photo exacerbate the effect.

While we all know it is easy to fake a ghost photo using in-camera methods such as long exposure or multiple exposure, or in post-production using imaging software such as Photoshop, current camera technology makes faking hardly necessary. It is far easier to use a compact digital camera or cellphone camera and let it produce the anomalous effects automatically: one reason why ‘ghost hunters’ use them. The photographer can then claim ignorance and honestly say they didn’t tamper with the photo: it is exactly how the camera saw it. Having done a fair amount of ghost hunting myself, I find it tempting to use a digital compact camera, knowing that while it is highly unlikely to photograph an actual ghost, it will produce a certain number of anomalous photographs that will at least spice up the investigation report!³

In my experience of analysing photographs, I have found that some people are prepared to accept a rational explanation of what they thought may have been a photograph of a paranormal event. Others don’t want to hear anything rational; they’ve made up their mind that there’s a ghost in the photo and that’s the end of it. Having looked at a large number of photographs that allegedly show ghosts, I haven’t yet come across one that doesn’t fall into one of the general categories of photographic anomaly referred to above or isn’t a probable fake.

While I think that people do have ghost-like experiences (an opinion based mainly on the vast accumulation of published anecdotal evidence, but also on some personal experiences that remain unexplained), it is probably not possible to photograph a ghost as such using any known method of photography (including imaging in parts of the electromagnetic spectrum outside visible light). Photographs are not considered hard evidence of anything much these days anyway, because it is widely known that even a moderately skilled photographer or Photoshop operator can create a realistic-looking picture of almost any fantasy. In paranormal matters a photograph can at best be considered circumstantial evidence, requiring backup from other types of hard data and witness accounts to lend it evidential weight.

Footnotes:

1. A standard lens has a focal length close to the diagonal measurement of the film or digital sensor. This lens renders objects in correct proportion according to their distance: a neutral perspective, neither compressed (as by longer focal length, or ‘telephoto’, lenses) nor exaggerated (as by shorter focal length, wide-angle lenses).

2. Circle of Confusion (COC) is a term in optics for the image of a point of light, in or out of focus, at the imaging plane of a lens. Each point of an object forms an image circle whose diameter depends on how far that point is from sharp focus, with an in-focus point forming a tiny COC that effectively appears as a point. An infinite number of larger, overlapping COCs form the blurry (unfocussed) areas of an image. This is the basis of Depth of Field in photography.

3. In Strange Occurrences we use digital photography in much the same way as police photographers do: to record details of a location for later reference. Also, long exposures with a digital SLR on a tripod can show things the unaided eye cannot quite make out in low light, such as reflected and/or diffracted light patterns from external light sources that may appear somewhat ghost-like.

Captions: The placing of the flash close to the short focal length lens of a digital camera means that dust motes can be illuminated as ‘orbs’.