There may indeed be a place for creationism in the science classroom, but not the way the creationists want. This article is based on a presentation to the 2011 NZ Skeptics Conference.

We live in a time when science features large in our lives, probably more so than ever before. It’s important that people have at least some understanding of how science works, not least so that they can make informed decisions when aspects of science impinge on them. Yet this is also a time when pseudoscience seems to be on the increase. Some would argue that we should simply ignore it. I suggest that we put it to good use instead, and use pseudoscience to help teach about the nature of science – something that Jane Young has done in her excellent book The Uncertainty of it All: Understanding the Nature of Science.

The New Zealand Curriculum (MoE, 2007) makes it clear that there’s more to studying science than simply accumulating facts:

Science is a way of investigating, understanding, and explaining our natural, physical world and the wider universe. It involves generating and testing ideas, gathering evidence – including by making observations, carrying out investigations and modeling, and communicating and debating with others – in order to develop scientific knowledge, understanding and explanations (ibid., p28).

In other words, studying science also involves learning about the nature of science: that it’s a process as much as, or more than, a set of facts. Pseudoscience offers a lens through which to approach this.

Thus, students should be encouraged to think about how valid, and how reliable, particular statements may be. They should learn about the process of peer review: whether a particular claim has been presented for peer review; who reviewed it; where it was published. There’s a big difference between information that’s been tested and reviewed, and information (or misinformation) that simply represents a particular point of view and is promoted via the popular press. Think ‘cold fusion’, the claim that nuclear fusion could be achieved in the lab at room temperature. It was trumpeted to the world by press release, but subsequently debunked as other researchers tried, and failed, to duplicate its findings.

A related concept here is that there’s a hierarchy of journals, with publications like Science at the top and Medical Hypotheses at the other end of the spectrum. Papers submitted to Science are subject to stringent peer review processes – and many don’t make the grade – while Medical Hypotheses seems to accept submissions uncritically, with minimal review. One example is a paper suggesting that drinking cows’ milk would raise the odds of breast cancer because of hormone levels in the milk, despite the fact that the actual data on hormone titres didn’t support this.

This should help our students develop the sort of critical thinking skills that they need to make sense of the cornucopia of information that is the internet. Viewing a particular site, they should be able to ask – and answer! – questions about the source of the information they’re finding, whether or not it’s been subject to peer review (you could argue that the internet is an excellent ‘venue’ for peer review but all too often it’s simply self-referential), how it fits into our existing scientific knowledge, and whether we need to know anything else about the data or its source.

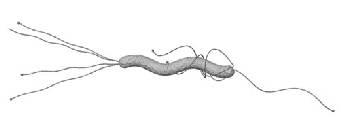

An excellent example that could lead to discussion around both evolution and experimental design, in addition to the nature of science, is the on-line article Darwin at the drugstore: testing the biological fitness of antibiotic-resistant bacteria (Gillen & Anderson, 2008). The researchers wished to test the concept that a mutation conferring antibiotic resistance rendered the bacteria possessing it less ‘fit’ than those lacking it. (There is an energy cost to bacteria in producing any protein, but whether this renders them less fit – in the Darwinian sense – is entirely dependent on context.)

The researchers used two populations of the bacterium Serratia marcescens: an ampicillin-resistant lab-grown strain, which produces white colonies, and a pink, non-resistant (‘wild-type’) population obtained from pond water. ‘Fitness’ was defined as “growth rate and colony ‘robustness’ in minimal media”. After 12 hours’ incubation the two populations showed no difference in growth on normal lab media (though there were differences between four and six hours), but the wild-type strain did better on minimal media. It is hard to judge whether the difference was of any statistical significance as the paper’s graphs lack error bars and there are no tables showing the results of statistical comparisons – nonetheless, the authors describe the differences in growth as ‘significant’.
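To show students what the missing error bars would add, here is a minimal Python sketch. The colony measurements are invented for illustration (they are not taken from the Gillen & Anderson paper), the function names are hypothetical, and the critical value assumes five replicates per strain.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t for 4 degrees of freedom
# (five replicates per strain), as listed in standard t-tables.
T_CRIT_95_DF4 = 2.776


def mean_and_error_bar(values, t_crit=T_CRIT_95_DF4):
    """Return (mean, half-width of the 95% confidence interval).

    The default t_crit is only appropriate for five replicates.
    """
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))  # standard error
    return mean, t_crit * sem


def intervals_overlap(a, b):
    """True if two (mean, half_width) intervals share any values."""
    (mean_a, half_a), (mean_b, half_b) = a, b
    return abs(mean_a - mean_b) <= half_a + half_b


# Hypothetical colony diameters in mm after 12 hours on minimal media.
wild_type = [2.1, 2.4, 2.0, 2.6, 2.3]
resistant = [1.9, 2.2, 2.5, 1.8, 2.1]

wild_bar = mean_and_error_bar(wild_type)
resistant_bar = mean_and_error_bar(resistant)

print(f"wild type: {wild_bar[0]:.2f} +/- {wild_bar[1]:.2f} mm")
print(f"resistant: {resistant_bar[0]:.2f} +/- {resistant_bar[1]:.2f} mm")
print("error bars overlap:", intervals_overlap(wild_bar, resistant_bar))
```

Overlapping error bars do not prove that two strains grow equally well, and a formal statistical test would still be needed. They do make it obvious to a reader when a difference in means could simply reflect variation between replicates.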

Their conclusion? Antibiotic resistance did not enhance the fitness of Serratia marcescens:

… wild-type [S.marcescens] has a significant fitness advantage over the mutant strains due to its growth rate and colony size. Therefore, it can be argued that ampicillin resistance mutations reduce the growth rate and therefore the general biological fitness of S.marcescens. This study concurs with Anderson (2005) that while mutations providing antibiotic resistance may be beneficial in certain, specific, environments, they often come at the expense of pre-existing function, and thus do not provide a mechanism for macroevolution (Gillen & Anderson, 2008).

Let’s take the opportunity to apply some critical thinking to this paper. Students will all be familiar with the concept of a fair test, so they’ll probably recognise fairly quickly that such a test was not performed in this case: the researchers were not comparing apples with apples. When one strain of the test organism is lab-bred and not only antibiotic-resistant but forms different-coloured colonies from the pond-dwelling wild-type, there are a lot of different variables in play, not just the one whose effects are supposedly being examined.

In addition, and more tellingly, the experiment did not test the fitness of the antibiotic-resistance gene in the environment where it might convey an advantage. The two Serratia marcescens strains were not grown in media containing ampicillin! Evolutionary biology actually predicts that the resistant strain would be at a disadvantage in minimal media, because it’s using energy to express a gene that provides no benefit in that environment, so will likely be short of energy for other cellular processes. (And, as I commented earlier, the data as presented do not demonstrate any statistically significant difference between the two bacterial strains.)

What about the authors’ affiliations, and where was the paper published? Both authors work at Liberty University, a private faith-based institution with strong creationist leanings. And the article is an on-line publication in the ‘Answers in Depth’ section of the website of Answers in Genesis (a young-earth creationist organisation) – not in a mainstream peer-reviewed science journal. This does suggest that a priori assumptions may have coloured the experimental design.

Other clues

It may also help for students to learn about other ways to recognise ‘bogus’ science, something I’ve blogged about previously (see Bioblog – seven signs of bogus science). One clue is where information is presented via the popular media (where ‘popular media’ includes websites), rather than offered up for peer review, and students should be asking, why is this happening?

The presence of conspiracy theories is another warning sign. Were the twin towers brought down by terrorists, or by the US government itself? Is the US government deliberately suppressing knowledge of a cure for cancer? Is vaccination really for the good of our health or the result of a conspiracy between government and ‘big pharma’ to make us all sick so that pharmaceutical companies can make more money selling products to help us get better?

“My final conclusion after 40 years or more in this business is that the unofficial policy of the World Health Organisation and the unofficial policy of Save the Children’s Fund and almost all those organisations is one of murder and genocide. They want to make it appear as if they are saving these kids, but in actual fact they don’t.” (Dr A. Kalokerinos, quoted on a range of anti-vaccination websites.)

Conspiracy theorists will often use the argument from authority, almost in the same breath. It’s easy to pull together a list of names, with PhD or MD after them, to support an argument (eg palaeontologist Viera Scheibner on vaccines). Students could be given such a list and encouraged to ask, what is the field of expertise of these ‘experts’? For example, a mailing to New Zealand schools by a group called “Scientists Anonymous” offered an article purporting to support ‘intelligent design’ rather than an evolutionary explanation for a feature of neuroanatomy, authored by a Dr Jerry Bergman. However, a quick search indicates that Dr Bergman has made no recent contributions to the scientific literature in this field, but has published a number of articles with a creationist slant, so he cannot really be regarded as an expert authority in this particular area. Similarly, it is well worth reviewing the credentials of many anti-vaccination ‘experts’ – the fact that someone has a PhD is by itself irrelevant; the discipline in which that degree was gained is what matters. (Observant students may also wonder why the originators of the mailout feel it necessary to remain anonymous…)

Students also need to know the difference between anecdote and data. Humans are pattern-seeking animals and we do have a tendency to see non-existent correlations where in fact we are looking at coincidences. For example, a child may develop a fever a day after receiving a vaccination. But without knowing how many non-vaccinated children also developed a fever on that particular day, it’s not actually possible to say that there’s a causal link between the two.
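The missing comparison can be made concrete with a small Python sketch. The counts below are invented purely for illustration, and the function name is hypothetical. The point is that the fever rate among vaccinated children means little until it is set beside the rate among unvaccinated children over the same period.

```python
def risk_ratio(cases_exposed, n_exposed, cases_unexposed, n_unexposed):
    """Rate of the outcome in the exposed group divided by the rate
    in the unexposed group. A value near 1 suggests no association."""
    rate_exposed = cases_exposed / n_exposed
    rate_unexposed = cases_unexposed / n_unexposed
    return rate_exposed / rate_unexposed


# Hypothetical counts: children who developed a fever on a given day.
vaccinated = {"fever": 12, "total": 400}
unvaccinated = {"fever": 9, "total": 300}

ratio = risk_ratio(
    vaccinated["fever"], vaccinated["total"],
    unvaccinated["fever"], unvaccinated["total"],
)

print(f"fever rate, vaccinated:   {vaccinated['fever'] / vaccinated['total']:.1%}")
print(f"fever rate, unvaccinated: {unvaccinated['fever'] / unvaccinated['total']:.1%}")
print(f"risk ratio: {ratio:.2f}")
```

With these made-up numbers, twelve feverish vaccinated children sound alarming as a set of anecdotes, yet the rate is the same in both groups. Real data would also need a large enough sample and a test of statistical significance before any conclusion could be drawn.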

A question of balance

Another important message for students is that there are not always two equal sides to every argument, notwithstanding the catch cry of “teach the controversy!” This is an area where the media, with their tendency to allot equal time to each side for the sake of ‘fairness’, are not helping. Balance is all very well, but not without due cause. So, apply scientific thinking – say, to claims for the health benefits of sodium bicarbonate as a cure for supposedly fungal-based cancer (www.curenaturalicancro.com). Its purveyors make quite specific claims concerning health and well-being – drinking sodium bicarbonate will cure cancer and other ailments by “alkalizing” your tissues, thus countering the effects of excess acidity! How would you test those claims of efficacy? What are the mechanisms by which drinking sodium bicarbonate (or for some reason lemon juice!) – or indeed any other alternative health product – is supposed to have its effects? (Claims that a ‘remedy’ works through mechanisms as yet unknown to science don’t address this question, but in addition, they presuppose that it does actually work.) In the new Academic Standards there’s a standard on homeostasis, so students could look at the mechanisms by which the body maintains a steady state in regard to pH.
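One of those mechanisms, the bicarbonate buffer system, can be explored with the Henderson-Hasselbalch equation. The sketch below is only a starting point for classroom discussion. It assumes the usual textbook constants (a pKa of 6.1 for carbonic acid and a CO2 solubility of 0.03 mmol/L per mmHg), the function name is hypothetical, and the input values are typical reference figures rather than measurements.

```python
import math

PKA_CARBONIC_ACID = 6.1   # textbook value at body temperature
CO2_SOLUBILITY = 0.03     # mmol/L of dissolved CO2 per mmHg of pCO2


def blood_ph(bicarbonate_mmol_per_l, pco2_mmhg):
    """Henderson-Hasselbalch estimate of blood pH from the
    bicarbonate concentration and the partial pressure of CO2."""
    dissolved_co2 = CO2_SOLUBILITY * pco2_mmhg
    return PKA_CARBONIC_ACID + math.log10(bicarbonate_mmol_per_l / dissolved_co2)


# Typical reference values: bicarbonate 24 mmol/L, pCO2 40 mmHg.
print(f"estimated blood pH: {blood_ph(24, 40):.2f}")
```

Because the result depends on the ratio of bicarbonate to dissolved CO2, students can ask how breathing (which changes pCO2) and kidney function (which changes bicarbonate) would respond to an extra dose of bicarbonate, and whether any lasting “alkalizing” effect is plausible.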

If students can learn to apply these tools to questions of science and pseudoscience, they’ll be well equipped to find their way through the maze of conflicting information that the modern world presents, regardless of whether they go on to further study in the sciences.

References

- Gillen, A.G. & Anderson, S. (2008): Darwin at the drugstore: testing the biological fitness of antibiotic-resistant bacteria. www.answersingenesis.org/articles/aid/v2/n1/Darwin-at-drugstore

- Ministry of Education (2007): The New Zealand Curriculum. nzcurriculum.tki.org.nz/Curriculum-documents/The-New-Zealand-Curriculum

- Young, J. (2010): The uncertainty of it all: understanding the nature of science. Triple Helix Resources Ltd.

- 10:23 Campaign (2011): www.1023.org.uk